Evaluation Queues

An evaluation queue allows you to submit Synapse files or Docker images for evaluation. Evaluation queues are designed to support open-access data analysis and modeling challenges in Synapse. This framework provides tools for administrators to collect and analyze data models created by Synapse users for a specific goal or purpose.

Create an Evaluation Queue

To create a queue, you must first create a Synapse Challenge project or have edit permissions on an existing project.

For instructions on setting up a Synapse Project, see Setting Up a Project.

If you do not see the challenge tab in your project:

Navigate to the bottom of the page and click experimental mode: off to turn on experimental mode.

Click Project Tools in the right corner, select Run Challenge, and follow the instructions.

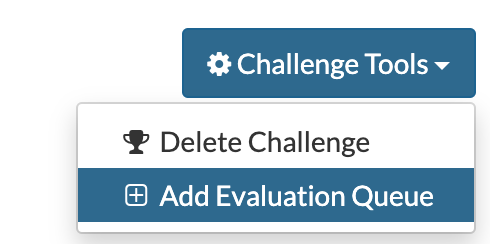

Once your project has the Challenge tab, navigate to it and click Challenge Tools in the right corner and select Add Evaluation Queue.

An evaluation queue can take several parameters that you can use to customize your preferences.

Name: Unique name of the evaluation

Description: A short description of the evaluation

Submission instructions: Message to display to users detailing acceptable formatting for submissions

Submission receipt message: Message to display to users upon submission

Note: The name of your evaluation queue must be unique; otherwise, the queue will not be created.
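The same parameters can also be supplied from the Python client. The following is a minimal sketch, assuming the client's `Evaluation` class accepts the field names `contentSource`, `submissionInstructionsMessage`, and `submissionReceiptMessage`. The helper names, the project ID `syn1234567`, and the message text are placeholders introduced here for illustration.

```python
def evaluation_fields(project_id, name, description, instructions, receipt):
    """Collect the queue parameters described above into keyword arguments.

    The key names are assumed to match the fields of synapseclient.Evaluation.
    """
    if not name.strip():
        raise ValueError("An evaluation queue needs a non-empty, unique name")
    return {
        "name": name,
        "description": description,
        "contentSource": project_id,  # Synapse ID of the challenge project
        "submissionInstructionsMessage": instructions,
        "submissionReceiptMessage": receipt,
    }


def create_queue(syn, fields):
    """Store a new evaluation queue using an already logged-in client."""
    import synapseclient  # imported lazily so evaluation_fields has no dependencies

    return syn.store(synapseclient.Evaluation(**fields))


fields = evaluation_fields(
    project_id="syn1234567",  # placeholder project ID
    name="My Challenge Queue",
    description="Round 1 predictions",
    instructions="Submit a single CSV file.",
    receipt="Thanks, your submission was received.",
)
```

With a logged-in client, `create_queue(synapseclient.login(), fields)` would then attempt to create the queue; the call fails if the name is already taken.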

Setting Quotas on an Evaluation Queue

Optionally, you can restrict submissions by adding a quota to each round of your challenge.

An evaluation queue can only have one quota. You must specify some required parameters: the length of time the queue is open, the start date, round duration, and number of rounds. It is optional to set a submission limit.

Duration (Round Start and Round End) - Select a date and time for the start and end of the round.

Submission Limit - The maximum number of submissions per team/participant per round. Please keep in mind that the system will prevent additional submissions by a user/team once they have hit this number of submissions.

Advanced Limits - You may set additional quotas for daily, weekly, or monthly submissions per team/participant. These limits can be combined by clicking the + sign next to the Maximum Submissions field.
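To make the interaction between the round dates and the submission limit concrete, here is a small illustrative model. It is not Synapse's implementation: the `Round` class and `can_submit` function are hypothetical, and treating the round end as exclusive is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Round:
    start: datetime
    end: datetime
    submission_limit: Optional[int] = None  # None means no limit is set


def can_submit(round_, now, submissions_so_far):
    """Return True if one more submission would be accepted under this model."""
    # Outside the round window, nobody can submit.
    if not (round_.start <= now < round_.end):
        return False
    # Inside the window, the optional per-round limit applies.
    if round_.submission_limit is None:
        return True
    return submissions_so_far < round_.submission_limit


round_one = Round(datetime(2024, 1, 1), datetime(2024, 2, 1), submission_limit=3)
print(can_submit(round_one, datetime(2024, 1, 15), submissions_so_far=2))  # True
print(can_submit(round_one, datetime(2024, 1, 15), submissions_so_far=3))  # False
print(can_submit(round_one, datetime(2024, 2, 2), submissions_so_far=0))   # False
```

Daily, weekly, or monthly limits could be modeled the same way by counting only the submissions that fall inside the relevant window.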

Share an Evaluation Queue

Each evaluation has sharing settings, which limit who can interact with the evaluation.

Administrator sharing should be tightly restricted, as it includes authority to delete the entire evaluation queue and its contents. These users also have the ability to download all of the submissions.

Can Score allows individuals to download all of the submissions.

Can Submit allows teams or individuals to submit to the evaluation, but does not give them access to any of the submissions.

Can View allows for teams or individuals to view the submissions on a leaderboard.

To set the sharing settings, go to the Challenge tab to view your list of evaluations. Click the Share button for an evaluation and share it with the relevant teams or individuals.

Important: When someone submits to an evaluation queue, a copy of the submission is made, so a person with “Administrator” or “Can Score” access will be able to download the submission even if the submitter deletes the entity.
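Sharing settings can also be applied from the Python client with `setPermissions`. The sketch below is hedged: the mapping from the sharing levels above to access-type strings is an assumption and should be checked against the Synapse REST documentation, and `share_queue` and the principal ID are hypothetical.

```python
# Assumed mapping from the sharing levels above to Synapse access-type strings.
ACCESS_TYPES = {
    "Can View": ["READ"],
    "Can Submit": ["READ", "SUBMIT"],
    "Can Score": ["READ", "READ_PRIVATE_SUBMISSION", "UPDATE_SUBMISSION"],
}


def share_queue(syn, evaluation, principal_id, level):
    """Grant one sharing level on an evaluation queue to a user or team.

    `evaluation` is expected to be an Evaluation object, for example the
    result of syn.getEvaluation(evaluation_id).
    """
    if level not in ACCESS_TYPES:
        raise ValueError(f"Unknown sharing level: {level!r}")
    return syn.setPermissions(
        evaluation,
        principalId=principal_id,
        accessType=ACCESS_TYPES[level],
    )
```

Administrator access is deliberately left out of the mapping, since it should be granted sparingly.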

Close an Evaluation Queue

While there isn’t technically a way of “closing” an evaluation queue, there are multiple ways to discontinue submissions for users.

Users are only able to submit to a queue if they have Can Submit permissions to it. If you have the ability to modify the permissions of a queue, you will still be able to submit to the queue due to your Administrator access.

If the quota is set so that the current date is past the round start plus the round duration, no one will be able to submit to the queue. This includes users with Administrator permissions.

Deleting a queue will also discontinue the ability to submit to it. Be careful when doing this, as deleting a queue is irreversible and you will lose all submissions.

Submitting to an Evaluation Queue

Any Synapse entity may be submitted to an evaluation queue.

In the R and Python examples, you need to know the ID of the evaluation queue. This ID must be provided to you by administrators of the queue.

The submission function takes two optional parameters: name and team. Name can be provided to customize the submission. The submission name is often used by participants to identify their submissions. If a name is not provided, the name of the entity being submitted will be used. As an example, if you submit a file named testfile.txt, and the name of the submission isn’t specified, it will default to testfile.txt. Team names can be provided to recognize a group of contributors.

Python

```python
import synapseclient

syn = synapseclient.login()

evaluation_id = "9610091"
my_submission_entity = "syn1234567"

submission = syn.submit(
    evaluation=evaluation_id,
    entity=my_submission_entity,
    name="My Submission",  # An arbitrary name for your submission
    team="My Team Name",  # Optional, can also pass a Team object or id
)
```

R

```r
library(synapser)

synLogin()

evaluation_id <- "9610091"
my_submission_entity <- "syn1234567"

submission <- synSubmit(
  evaluation = evaluation_id,
  entity = my_submission_entity,
  name = "My Submission", # An arbitrary name for your submission
  team = "My Team Name") # Optional, can also pass a Team object or id
```

Submissions

Every submission you make to an evaluation queue has a unique ID. This ID should not be confused with Synapse IDs which start with the prefix “syn” (for example, syn12345678). All submissions have a Submission and SubmissionStatus object.
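Both objects can be retrieved with the Python client by submission ID. A minimal sketch, assuming a logged-in client `syn`; the helper name `fetch_submission` and the example ID are placeholders.

```python
def fetch_submission(syn, submission_id):
    """Return the Submission and SubmissionStatus for one submission ID."""
    # downloadFile=False retrieves the metadata without downloading the file.
    submission = syn.getSubmission(submission_id, downloadFile=False)
    status = syn.getSubmissionStatus(submission_id)
    return submission, status
```

For example, `fetch_submission(syn, "9700001")` would return the pair for a submission with that (hypothetical) ID. Note that the ID is a plain number with no "syn" prefix.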

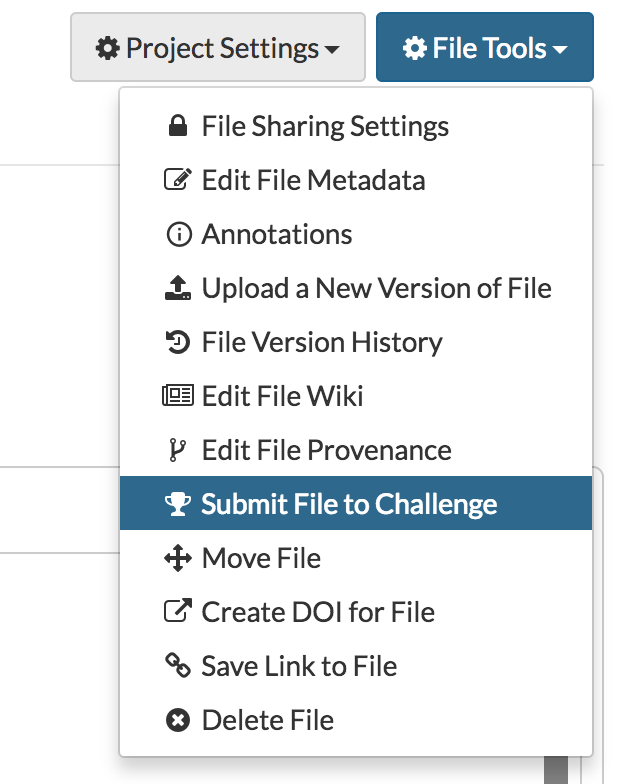

Navigate to a file in Synapse and click on File Tools in the upper right-hand corner. Select Submit To Challenge to pick the challenge for your submission. Follow the provided steps to complete your submission.

View Submissions of an Evaluation Queue

Submissions can be viewed and shared with users through submission views, which can be used to create dynamic leaderboards. Submission annotations can be added to a SubmissionStatus object and are automatically indexed in the view.
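As a sketch of how annotations might be added from the Python client, the helper below sets values on the `submissionAnnotations` property of a SubmissionStatus and stores it. It assumes a client version that exposes that property; `annotate_submission` and the `score` key are illustrative names.

```python
def annotate_submission(syn, submission_id, annotations):
    """Add annotations to a submission's status and save them to Synapse."""
    status = syn.getSubmissionStatus(submission_id)
    for key, value in annotations.items():
        # Each key becomes a candidate column in a submission view.
        status.submissionAnnotations[key] = value
    return syn.store(status)
```

A scoring script with Can Score or Administrator access could call `annotate_submission(syn, "9700001", {"score": 0.87})` after evaluating a submission.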

Creating the Submission View

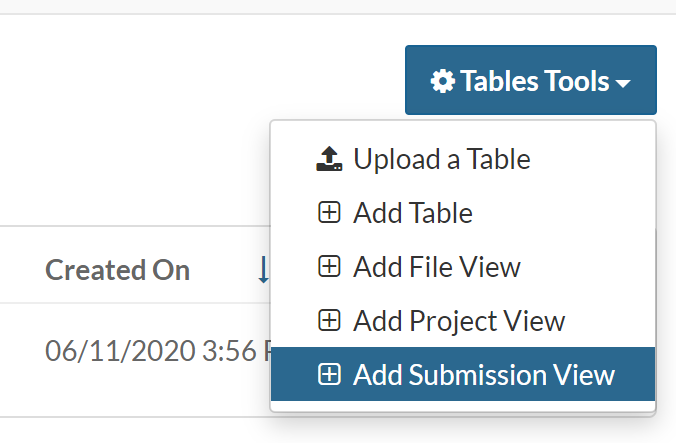

Navigate to the Tables tab and, under the Table Tools menu in the upper right-hand corner, select Add Submission View:

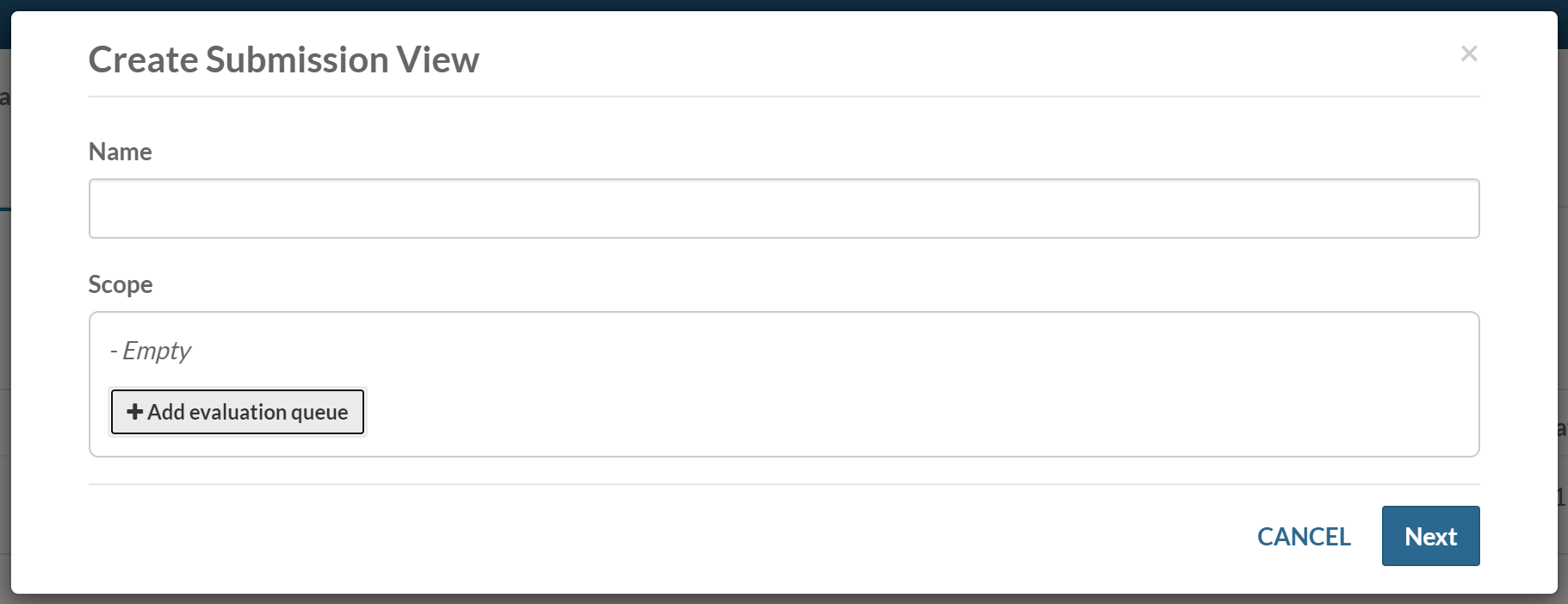

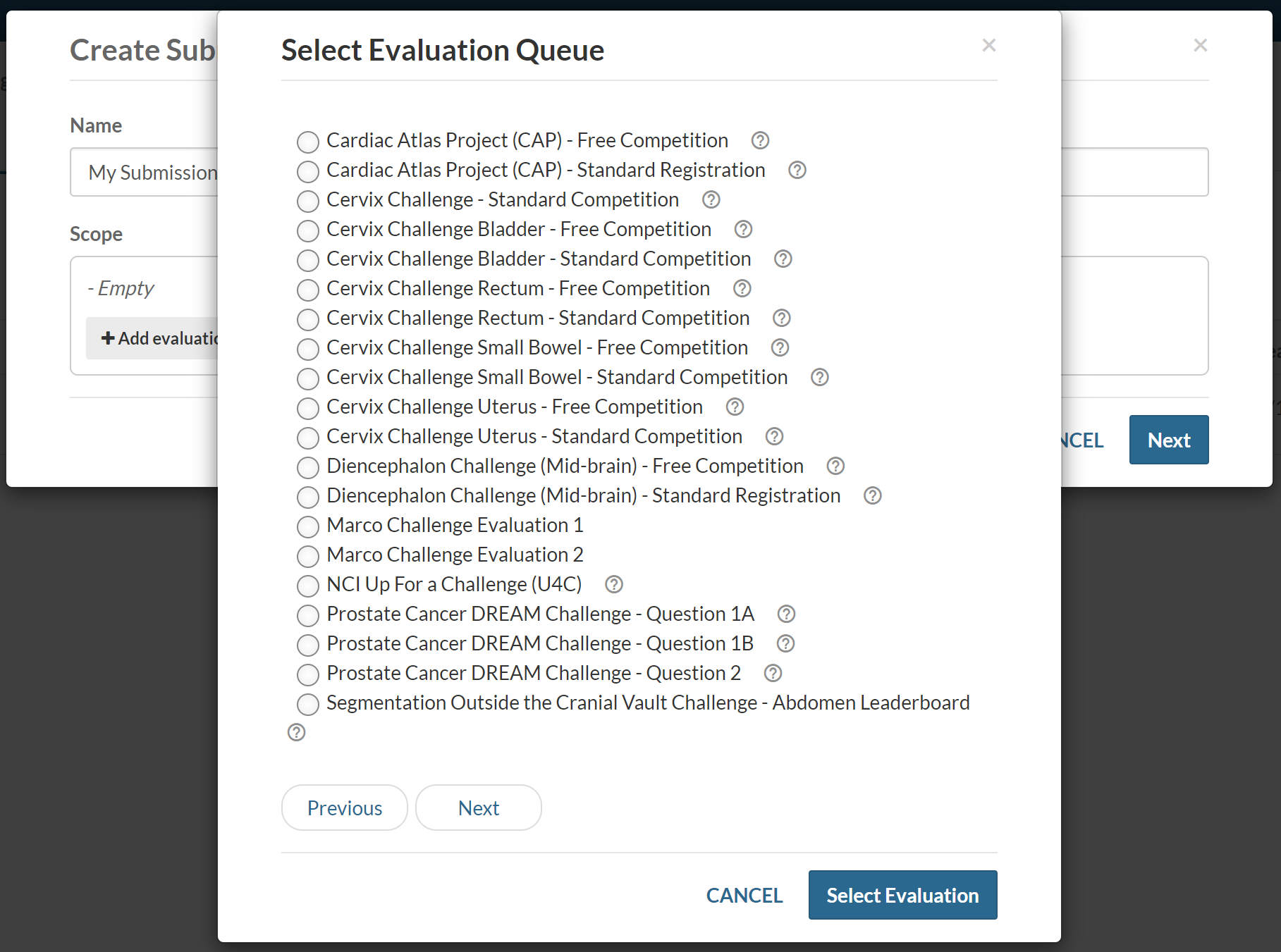

You can name the view, and select the evaluation queues to include in the scope.

You can add multiple evaluation queues to the scope:

Note: You must be an administrator of each selected evaluation queue to create the view.

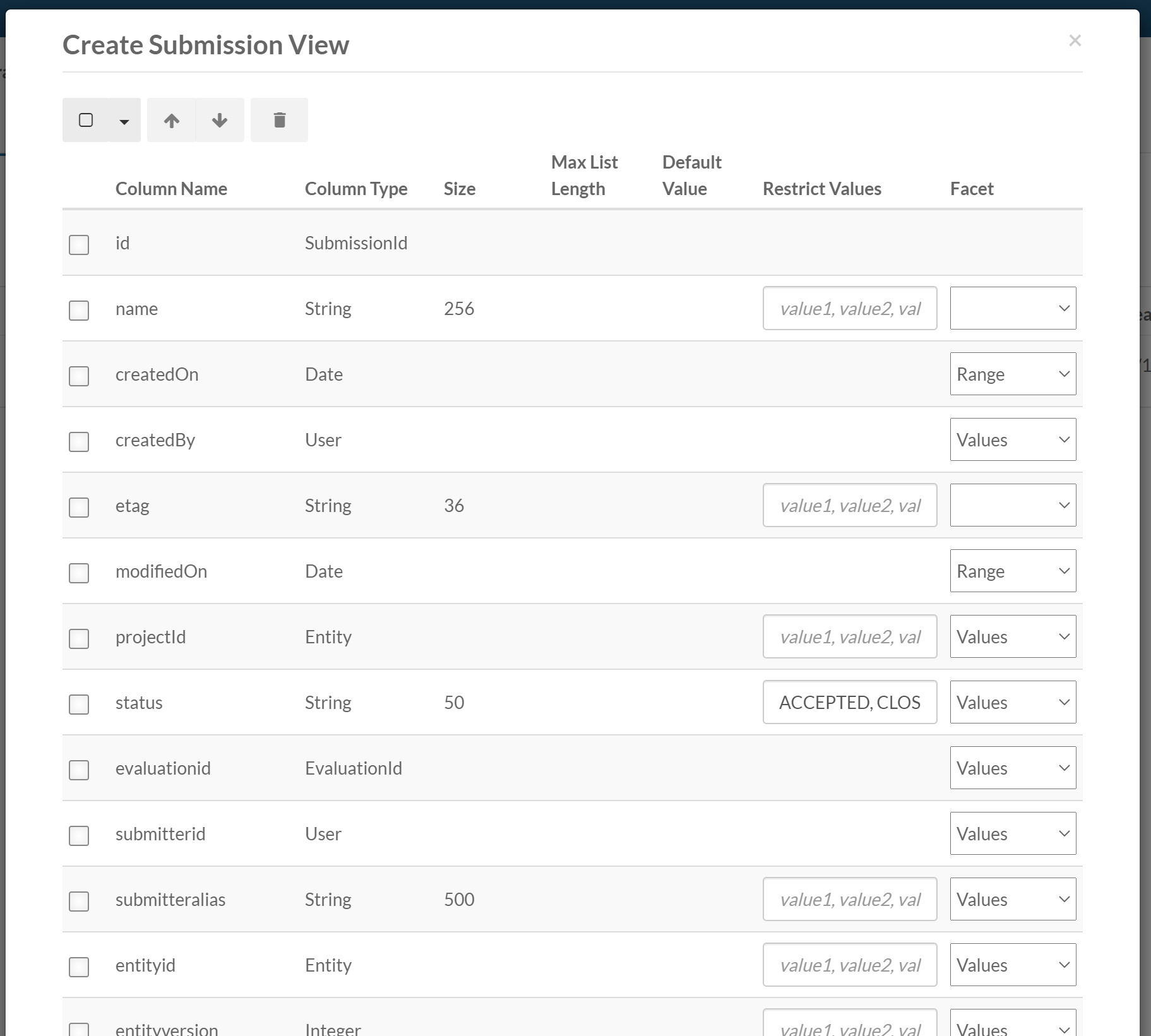

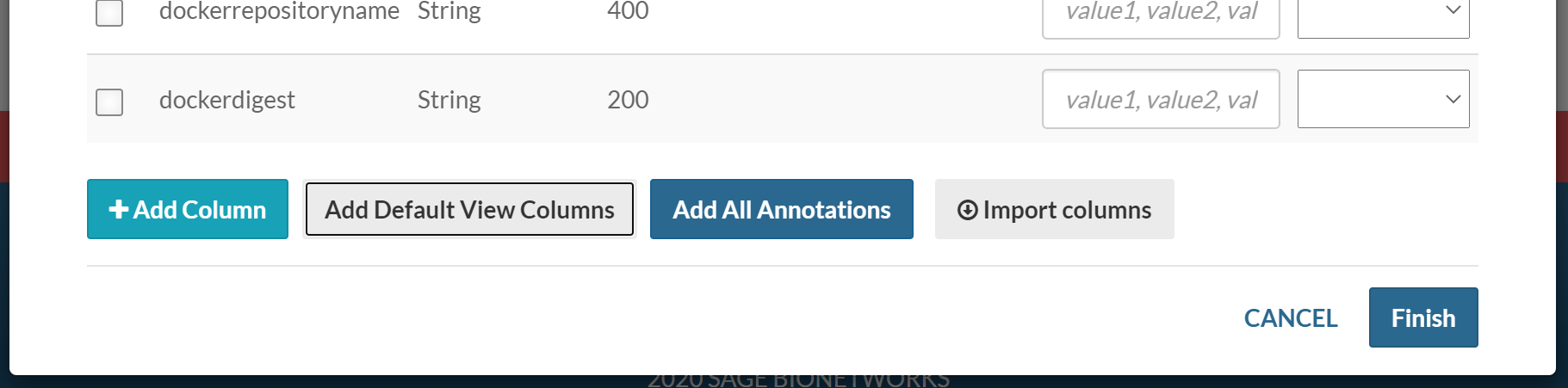

During the creation process the default columns for a submission view will be included:

Selecting Add All Annotations will automatically include all the annotations found on the submissions in the scope as columns for the view:

Embed a Submission View in a Wiki Page

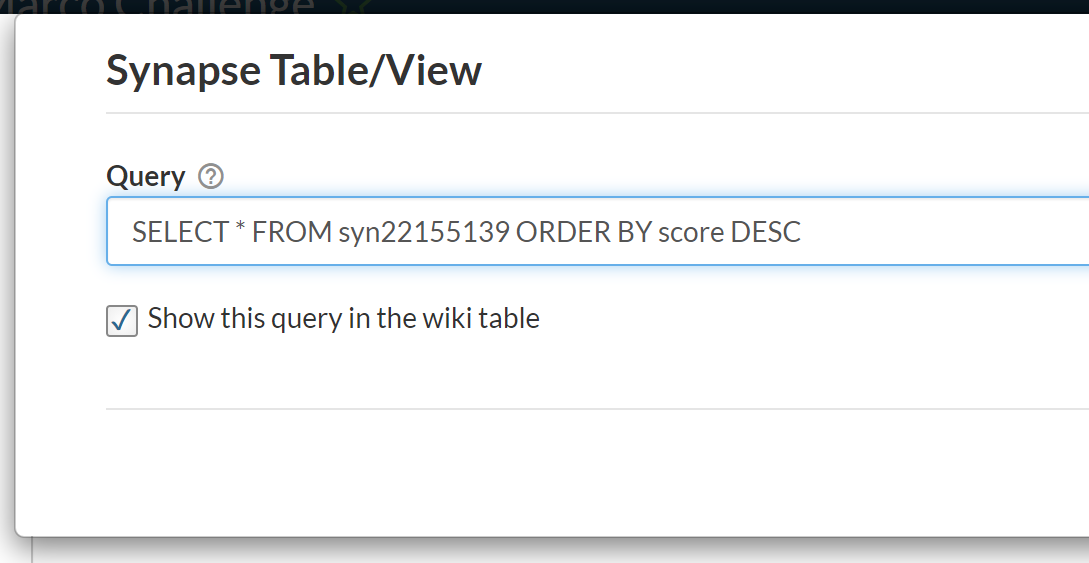

Once created, a submission view can be embedded into a wiki page using the Synapse Table/View wiki widget:

You can input your own query statement such as SELECT * FROM syn22155139 ORDER BY score DESC. Remember, syn22155139 should be replaced with the synID of the submission view:

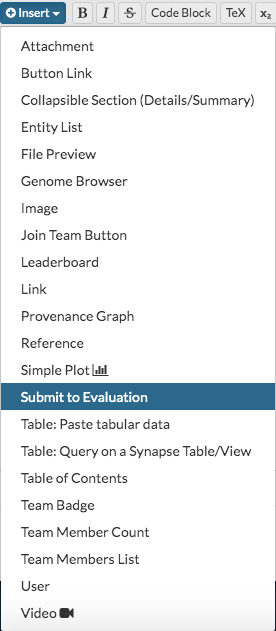
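The same query can be run from the Python client, which is handy for checking a leaderboard before embedding it. This is a minimal sketch: `leaderboard_query` and `fetch_leaderboard` are hypothetical helpers, the `score` column is assumed to exist as a submission annotation, and `asDataFrame()` requires pandas to be installed.

```python
def leaderboard_query(view_id, order_by="score", descending=True):
    """Build the query string for a submission view."""
    direction = "DESC" if descending else "ASC"
    return f"SELECT * FROM {view_id} ORDER BY {order_by} {direction}"


def fetch_leaderboard(syn, view_id):
    """Run the query with a logged-in client and return a pandas DataFrame."""
    return syn.tableQuery(leaderboard_query(view_id)).asDataFrame()


print(leaderboard_query("syn22155139"))
# SELECT * FROM syn22155139 ORDER BY score DESC
```

As in the widget, replace `syn22155139` with the synID of your own submission view.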

Submit to an Evaluation Queue from a Wiki Page

You may embed a Submit To Evaluation widget on a wiki page to improve visibility of your evaluation queue. The widget allows participants to submit to multiple evaluation queues within a project or a single evaluation queue.

Currently, this wiki widget is required to submit Synapse projects to an evaluation queue. Synapse Docker repositories cannot be submitted through this widget.

The “Evaluation Queue unavailable message” is customizable. A queue may appear unavailable to a user if:

The project doesn’t have any evaluation queues.

The evaluation queue quota is set such that a user cannot submit to the queue.

The user does not have permission to view a project’s evaluation queues.

Learn more about sharing settings here.